With regard to the Fermi Paradox, there is a belief common in transhumanist circles that the lack of ‘obvious’ galactic colonization is strong evidence that we are alone, that civilization is rare, and thus that there is some form of Great Filter. This viewpoint was espoused early on by writers such as Moravec, Kurzweil, and Hanson; it remains dominant today. It is based on an outdated, physically unrealistic model of the long-term future of computational intelligence.

The core question depends on the interplay between two rather complex speculations: the first being our historical model of the galaxy, the second being our predictive model for advanced civilization. The argument from Kurzweil/Moravec starts with a type of manifest destiny view of postbiological life: that the ultimate goal of advanced civilization is to convert the universe into mind (i.e. computronium). The analysis then proceeds to predict that properly civilized galaxies will fully utilize available energy via mega-engineering projects such as Dyson spheres, and that this transformation could manifest as a wave of colonization which grows outward at near the speed of light via very fast replicating von Neumann probes.

Hundreds of years from now this line of reasoning may seem as quaint to our posthuman descendants as the 19th century notion of Martians launching an invasion of Earth via interplanetary cannons. My critique comes in two parts: 1.) Manifest Destiny Transhumanism is unreasonably confident in its rather specific predictions for the shape of postbiological civilization, and 2.) the inference step used to combine the prior historical model (which generates the spatio-temporal prior distribution for advanced civs) with the future predictive model (which generates the expectation distribution) is unsound.

Advanced Civilizations and the Physical Limits of Computation

Imagine an engineering challenge where we are given a huge bag of advanced lego-like building blocks and tasked with organizing them into a computer that maximizes performance on some aggregate of benchmarks. Our supply of lego pieces is distributed according to some simple random model that is completely unrelated to the task at hand. Now imagine if we had unlimited time to explore all the various solutions. It would be extremely unlikely that the optimal solutions would use 100% of available lego resources. Without going into vastly more specific details, all we can say in general is that lego utilization of optimal solutions will be somewhere between 0 and 1.

Optimizing for ‘intelligence’ does not imply optimizing for ‘matter utilization’. They are completely different criteria.

Fortunately we do know enough today about the limits of computation according to current physics to make some slightly more informed guesses about the shape of advanced civs.

The key limiting factor is the Landauer Limit, which places a lower bound of kT ln 2 on the energy dissipated by any computation which involves the erasure of one bit of information (such as overwriting 1 bit in a register). The Landauer Principle is well supported both theoretically and experimentally and should be non-controversial. The practical limit for reliable computing is somewhat larger, in the vicinity of 100 kT, and modern chips are already approaching this practical limit, which will coincide with the inglorious end of Moore’s Law in roughly a decade or so.
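To put rough numbers on these bounds, here is a minimal sketch that evaluates kT ln 2 and the 100 kT practical figure quoted above. The function names and the choice of 300 K as "room temperature" are assumptions made for illustration; only the two formulas come from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy dissipated when one bit is erased at this temperature."""
    return K_B * temperature_kelvin * math.log(2)

def practical_limit_joules(temperature_kelvin: float, factor: float = 100.0) -> float:
    """Rough reliable-computing figure of ~100 kT (factor taken from the text)."""
    return factor * K_B * temperature_kelvin

ROOM_K = 300.0  # assumed room temperature, for illustration only

print(f"Landauer limit at {ROOM_K:.0f} K: {landauer_limit_joules(ROOM_K):.3e} J per bit erased")
print(f"~100 kT figure at {ROOM_K:.0f} K: {practical_limit_joules(ROOM_K):.3e} J per bit operation")
```

At 300 K the two figures differ by a factor of 100 / ln 2, a little over two orders of magnitude, which is the gap between the theoretical floor and the practical figure for reliable irreversible logic.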

The interesting question is then: what next? Moving to 3D chips is already underway and will offer some reasonable fixed gains in reducing the von Neumann bottleneck, wire delay and so on, but it doesn’t in any way circumvent the fundamental barrier. The only long term solution (in terms of offering many further orders of magnitude of increase in performance/watt) is moving to reversible computing. Quantum computing is the other direction and is closely related, in the sense that making large-scale general quantum computation possible appears to require the same careful control over entropy to prevent decoherence and thus also depends on reversible computing. This is not to say that every quantum computer design is fully reversible, but in practice the two paths are heavily intertwined.

A full discussion of reversible computing and its feasibility is beyond my current scope (google search: “mike frank reversible computing”); instead I will attempt to paint a useful high level abstraction.

The essence of computation is predictable control. The enemy of control is noise. A modern solid-state IC is essentially a highly organized crystal that can reliably send electronic signals between micro-components. As everything shrinks you can fit more components into the same space, but the noise problems increase. Noise is not uniformly distributed across spatial scales. In particular there is a sea of noise at the molecular scale in the form of random thermal vibrations. The Landauer Limit arises from applying statistical mechanics to analyze the thermal noise distribution. You can do a similar analysis for quantum noise and you get another distinct, but related limit.

Galactic Real Estate and the Zones of Intelligence

Notice that the Landauer Limit scales linearly with temperature, and thus one can get a straightforward gain from simply computing at lower temperatures, but this understates the importance of thermal noise. We know that reversible computing is theoretically possible and there doesn’t appear to be any upper limit to energy efficiency – as long as we have uses for logically reversible computations (and since physics is reversible it follows that general AI algorithms – as predictors of physics – should exist in reversible forms).

The practical engineering limits of computational efficiency depend on the noise barrier and the extent to which the computer can be isolated from the chaos of its surrounding environment. Our first glimpses of reversible computing today with electronic signalling all appear to require superconductors, simply because without them wire losses defeat the entire point. A handful of materials superconduct at comparatively high temperatures (above the boiling point of liquid nitrogen), but for the most part it’s a low temperature phenomenon. As another example, our current silicon computers work pretty well up to around 100°C or so, beyond which failure rates become untenable. Current chips wouldn’t work too well on Venus. It’s difficult to imagine an efficient computer that could work on the surface of the sun.

Now following this logic all the way down, we can see that 2.7K (the cosmic background temperature) opens up a vastly wider space of advanced reversible computing designs that are impossible at 270K (Earth temperatures), beyond the simple linear 100x efficiency gain. The more advanced a computational intelligence is, the more delicate it becomes, in direct proportion. The ideal environment for postbiological super-intelligences is a heavily shielded home utterly devoid of heat (chaos).
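The "simple linear 100x" figure follows directly from the Landauer bound scaling with temperature. A minimal sketch of that arithmetic, using the two temperatures quoted above (the function and constant names are illustrative assumptions):

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy dissipated when one bit is erased at this temperature."""
    return K_B * temperature_kelvin * math.log(2)

EARTH_K = 270.0  # Earth-like temperature used in the text
CMB_K = 2.7      # cosmic background temperature used in the text

# The bound is linear in T, so the gain is just the temperature ratio.
linear_gain = landauer_limit_joules(EARTH_K) / landauer_limit_joules(CMB_K)

for label, temp in (("Earth", EARTH_K), ("deep space", CMB_K)):
    erasures_per_joule = 1.0 / landauer_limit_joules(temp)
    print(f"{label:>10}: at most {erasures_per_joule:.2e} bit erasures per joule")
print(f"Linear gain from cooling: about {linear_gain:.0f}x")
```

This captures only the linear part of the argument. The larger claim, that low temperatures open up design spaces unavailable to warm hardware, is not something this calculation can show.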

Visualizing temperature across the universe as the analog of real estate desirability naturally leads to a Copernican paradigm shift. Temperature imposes something like a natural IQ barrier field that repulses postbiological civilization. Life first evolves in the heat bath of stars but then eventually migrates outwards into the interstellar medium, and perhaps eventually into cold molecular clouds or the intergalactic voids.

Bodies in the Oort Cloud have an estimated temperature in the balmy range of around 4-5K, and thus may represent the borderline habitable region for advanced minds.

Dark Matter and Cold Intelligences

Recent developments concerning the dark matter conundrum in cosmology can help shed some light on the amount of dark interstellar mass floating around between stars. Most of the ‘missing’ dark matter is currently believed to be non-baryonic, but these models still leave open a wide range of possible ratios between bright star-proximate mass and dark interstellar mass. More recently some astronomers have focused specifically on rogue/nomadic planets directly, with estimates ranging from around 2 rogue planets per visible star[1] up to a ratio of 100,000 rogues to regular planets.[2] The variance in these numbers suggests we still have much to learn on this question, but unquestionably the trend points towards a favorably large amount of baryonic mass free floating in the interstellar medium.

My current discussion has focused on a class of models for postbiological life that we could describe as cold solid state civilizations. It’s quite possible that even more exotic forms of matter, such as dark matter/energy, enable even greater computational efficiency. At this early stage the composition of non-baryonic dark matter is still an open problem, and it’s difficult to get any sense of the probability that it turns out to be useful for computation.

Cold dark intelligences would still require energy, but increasingly less in proportion to their technological sophistication and noise isolation (coldness). Artificial fusion or even antimatter batteries could provide local energy, ultimately sourced from solar power harvested closer to the low IQ zone surrounding stars and then shipped out-system. Energy may not even be a key constraint (in comparison to rare elements, for example).

Cosmological Abiogenesis Models

For all we know our galaxy could already be fully populated with a vast sea of dark civilizations. Intelligence and technology far beyond ours require ever more sophisticated noise isolation and thermal efficiency, which necessarily corresponds to reduced visibility. Our observations to date are certainly compatible with a well populated galaxy, but they are also compatible with an empty galaxy. We can now detect interstellar bodies and thus have recently discovered that the spaces between stars are likely teeming with an assortment of brown dwarfs, rogue planets and (perhaps) dark dragons.

In lieu of actually making contact (which could take hundreds of years if they exist but deem us currently uninteresting/unworthy/incommunicable), our next best bet is to form a big detailed Bayesian model that hopefully outputs some useful probability distribution. In a sense that is what our brains do to some approximation, but only with some caveats and gotchas.

In this particular case we have a couple of variables which we can measure directly – namely we know roughly how many stars and thus planetary systems exist: on the order of 10^11 stars in the Milky Way. Recent observations combined with simulations suggest a much larger number of planets, mostly now free-floating, but in general we are still talking about many billions of potentially life-hospitable worlds.

Concerning abiogenesis itself, the traditional view holds that life evolved on Earth shortly after its formation. The alternative is that simple life first evolved ... elsewhere (exogenesis/panspermia). The alternative view has gained ground recently, supported by experiments on the robustness of life, the vanishing time window for abiogenesis on Earth, the discovery of organic precursor molecules in interstellar clouds, and more recently general arguments from models of evolution.

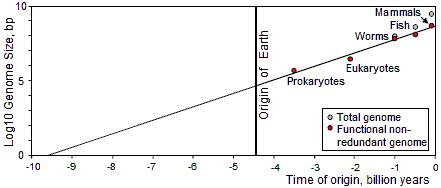

The following image from “Life Before Earth” succinctly conveys the paper’s essence:

Even if the specific model in this paper is wrong (and it has certainly engendered some criticism) the general idea of fitting genomic complexity to a temporal model and using that to estimate the origin is interesting and (probably) sound.

What all of this suggests is that life could be common, and it is difficult to justify a probability distribution over life in the galaxy that just so happens to cancel out the massive number of habitable worlds. If life really is about 9 billion years old, as this model suggests, our view changes from one where life evolves rarely and separately, as a distinct process on isolated planets, to one where simple early life evolves and spreads throughout the galaxy, with a transition from some common interstellar precursor to planet-specialized species around 4 billion years ago. There would naturally be some variance in the time course of events and rate of evolution on each planet. For example, if the ‘rate of evolution’ has a standard deviation of 1% across planets, that would correspond to a standard deviation of about 40 million years over the roughly 4-billion-year history from prokaryotes to humans.

If we could see the history of the galaxy unfold from an omniscient viewpoint, perhaps we’d find the earliest civilization appeared 100 million years ago (about 2.5 times that 40-million-year spread ahead of us) and colonized much of the high value real estate long before Dinofelis hunted Homo habilis on Earth.
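The arithmetic behind these figures can be checked with a short sketch. It takes the 1% spread in evolutionary rate and the roughly 4-billion-year history from the text, then asks how unusual a 100-million-year head start would be. The normal-distribution assumption and all names below are illustrative assumptions, not something the source specifies.

```python
import math

HISTORY_YEARS = 4e9      # prokaryotes to humans, as used in the text
RATE_SPREAD = 0.01       # 1% spread in 'rate of evolution' across planets (from the text)
HEAD_START_YEARS = 1e8   # earliest civilization appearing 100 million years ago

# First-order estimate: a 1% spread in rate gives a 1% spread in elapsed time.
sigma_years = RATE_SPREAD * HISTORY_YEARS

# How many spreads ahead of us would a 100-million-year head start be?
z_score = HEAD_START_YEARS / sigma_years

def normal_upper_tail(z: float) -> float:
    """P(Z >= z) for a standard normal variable (assumed distribution)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

tail_probability = normal_upper_tail(z_score)

print(f"Timing spread: {sigma_years:.1e} years")
print(f"Head start in units of that spread: {z_score:.2f}")
print(f"Fraction of planets at least that early (normal assumption): {tail_probability:.4f}")
```

Under these assumptions, even a small tail fraction multiplied by billions of candidate worlds leaves room for many early arrivals, which is the point the paragraph is making.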

In light of all this, the presumptions behind the Great Filter and the Fermi ‘Paradox’ become less tenable. Abiogenesis is probably not the filter. There still could be a filter around the multicellular transition or linguistic intelligence, but not in all models. Increasingly it looks like human brains are just scaled up hominid brains – there is nothing that stands out as the ‘secret sauce’ to our supposedly unique intelligence. In some of the modern ‘system’ models of evolution (of which the above paper is an example) the major developmental events in our history are expected attractors, something like the main sequence of biological evolution. Those models all output an extremely high probability that the galaxy is already colonized by dark alien superintelligences.

Our observations today don’t completely rule out stellar-transforming alien civs, but they provide pretty reasonable evidence that our galaxy has not been extensively colonized by aliens who like to hang out close to stars and capture most of that energy and/or visibly transform the system. In the first part of the article I explored the ultimate limits of computing and how they suggest that advanced civilizations will be dark and that the prime real estate is everywhere other than near stars.

However we could have reached the same conclusion independently by doing a Bayesian update on the discrepancy between the high prior for abundant life, the traditional Stellar Engineering model of post-biological life, and the observational evidence against that model. The Bayesian thing to do in this situation is infer (in proportion to the strength of our evidence) that the traditional model of post-biological life is probably wrong, in favor of new models.
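The shape of that update can be sketched in a few lines. Every number below is a made-up placeholder chosen only to show the mechanics, not an estimate from the text or from any dataset, and the three-hypothesis split is itself a simplifying assumption.

```python
# Hypothetical priors over three coarse hypotheses (placeholders, not estimates).
priors = {
    "abundant_life_stellar_engineering": 0.7,  # traditional model of post-biological life
    "abundant_life_dark_civs": 0.2,            # cold, low-visibility civilizations
    "rare_life": 0.1,                          # some early Great Filter
}

# Hypothetical probability of the observation "no visible stellar engineering"
# under each hypothesis (again placeholders).
likelihoods = {
    "abundant_life_stellar_engineering": 0.01,
    "abundant_life_dark_civs": 0.9,
    "rare_life": 0.95,
}

def bayes_update(prior: dict, likelihood: dict) -> dict:
    """Return the normalized posterior: prior times likelihood over total evidence."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())
    return {h: value / evidence for h, value in unnormalized.items()}

posterior = bayes_update(priors, likelihoods)

for hypothesis, probability in posterior.items():
    print(f"{hypothesis:<36} prior={priors[hypothesis]:.2f} posterior={probability:.3f}")
```

With placeholders like these, the hypothesis that predicts visible engineering loses most of its weight, and that weight is shared among the hypotheses compatible with a quiet sky in proportion to their priors. How it is shared depends entirely on the priors one is willing to defend.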

So, Where are They?

The net effect of the dark intelligence model and our current observations is that we should update in favor of all compatible answers to the Fermi Paradox, which include the simple “they are everywhere and have already made/attempted contact” and “they are everywhere and have ignored us”.

As an aside, it’s worth noting that some of the more interesting SETI signal candidates (such as SHGb02+14a) appear to emanate from interstellar space rather than a star – which is usually viewed as negative evidence for intelligent origin.

Seriously considering the possibility that aliens have been here all along is not an easy mental challenge. The UFO phenomenon is mostly noise, but is it all noise? Hard to say. In the end it all depends on what our models say the prior for aliens should be and how confident we are in those models vs our currently favored historical narrative.